Making academics compete for funding does not lead to better science

New study challenges the accepted science-policy view that more competitive funding and powerful top-down university management are the best way to boost the quality of science.

Universities and science policymakers across the world often subscribe to the mantra that more competitive funding and more powerful, top-down university management are the recipe for boosting the quality of science and getting research published in the highest-ranked journals.

This has become part of today’s accepted science policy, but in our recent study, we show that these views are far from supported by evidence.

By comparing data from 17 countries, we found that while more spending produces more high-quality science, increasing competition for resources does not.

In fact, competitiveness of funding and research quality are negatively correlated.

What’s more, we found that top-down management of universities did not boost research efficiency or scientific quality.

So, is it time to rethink how we produce more, higher quality research?

Science systems differ around the world

Researchers and policymakers tend to compare scientific performance across countries by looking at the ratio of the number of published scientific articles (output) to public spending on research and development (input). They might also consider how often these articles are cited by other scientists.

However, simply dividing outputs by funding is unlikely to give an accurate portrait of the efficiency of a research system, as universities operate so differently from one country to another.

For example, countries organise and administer their research budgets, finance PhD candidates (as students or as employees), and pay for facilities and buildings in very different ways.

Furthermore, while in theory the R&D expenditure data are collected and organised in the same way everywhere, in practice this is not the case. Factors like these make R&D spending levels quite useless for comparing the performance of research from one country to another.


Alternative view

We propose an alternative way of comparing research systems internationally. Instead of looking at levels of spending per se, we focused on the relationship between changes in input (spending) and changes in high-quality output (measured as papers among the top 10 per cent most cited).

In other words, we compared how many additional top papers countries get out of each additional unit of spending on R&D.
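One way to make "additional top papers per additional unit of spending" concrete is a least-squares slope over a country's yearly figures. The sketch below assumes each list holds one value per year for a single country; the function name `marginal_return` and the numbers in the example are hypothetical, and this is not necessarily the estimator the study used.

```python
from statistics import mean


def marginal_return(spending: list[float], top_papers: list[float]) -> float:
    """Least-squares slope of top-cited paper counts on R&D spending.

    Hypothetical sketch: each position in the two lists is one year for a
    single country. The slope estimates how many additional top-10%-cited
    papers the country gains per additional unit of spending. This is an
    illustration, not necessarily the estimator used in the study.
    """
    if len(spending) != len(top_papers) or len(spending) < 2:
        raise ValueError("need two equal-length series with at least two years")
    x_bar = mean(spending)
    y_bar = mean(top_papers)
    # Covariance and variance, both unnormalised (the factors cancel in the ratio).
    cov = sum((x - x_bar) * (y - y_bar) for x, y in zip(spending, top_papers))
    var = sum((x - x_bar) ** 2 for x in spending)
    if var == 0:
        raise ValueError("spending must change over time to estimate a slope")
    return cov / var


# Purely illustrative numbers, not data from the study.
print(marginal_return([10, 12, 14, 16], [100, 110, 120, 130]))  # 5.0
# Adding a fixed amount to every year's spending leaves the slope unchanged.
print(marginal_return([60, 62, 64, 66], [100, 110, 120, 130]))  # 5.0
```

The second call shows why working with changes helps: if one country books, say, building costs as R&D and another does not, that shifts the level of spending but not the slope, so the comparison is less sensitive to such accounting differences.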

This method has the advantage of sidestepping the structural and organisational differences between countries. The data are far from perfect, making definitive answers impossible at this stage. Nonetheless, we can use them to raise questions, challenge existing assumptions, and compare alternative theoretical approaches, all the more so because traditional studies have strongly influenced current science policy decisions.

Wrong policy advice

Our analysis indicates that differences in overall funding account for nearly 70 per cent of the variation in highly cited scientific output between countries. In other words, spending more produces better science.
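"Accounts for nearly 70 per cent of the variation" is the language of the coefficient of determination, R². The article does not state the exact model, so the sketch below simply assumes a straight-line fit of output on funding across countries; `r_squared` is a hypothetical helper, and the example values are toy numbers.

```python
from statistics import mean


def r_squared(x: list[float], y: list[float]) -> float:
    """Share of the variance in y explained by a straight-line fit on x."""
    x_bar, y_bar = mean(x), mean(y)
    slope = sum((a - x_bar) * (b - y_bar) for a, b in zip(x, y)) / sum(
        (a - x_bar) ** 2 for a in x
    )
    intercept = y_bar - slope * x_bar
    # Residual sum of squares: variation the fitted line fails to explain.
    ss_res = sum((b - (intercept + slope * a)) ** 2 for a, b in zip(x, y))
    # Total sum of squares: all variation of y around its mean.
    ss_tot = sum((b - y_bar) ** 2 for b in y)
    return 1 - ss_res / ss_tot


print(r_squared([1, 2, 3], [1, 3, 2]))  # 0.25
```

Under this reading, an R² of about 0.7 leaves 1 − 0.7, or roughly 0.3, of the variation to factors other than funding.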

This leaves about 30 per cent of the variation in scientific performance to be explained by factors other than funding. The obvious candidates already dominate research policy discussions:


- The amount of competitive funding relative to core funding

- Whether the allocation of core funding is performance-based

- The degree of autonomy of the university (i.e. more or less top-down management)

- Academic freedom of researchers (i.e. autonomy in selecting research topics and approaches)

Accepted science policy suggests that performance goes up when a greater share of funding is obtained competitively. So, more funding going into competitive grant schemes leads to better research – at least, that is the idea. This type of funding, in which researchers apply for money for a specific project and a panel of expert reviewers decides which projects to fund, covers between 30 and 60 per cent of public research funding, depending on the country.

The rest of research funding (direct institutional, or block-grant, funding) can be distributed competitively (performance-based) or not, and the common view is that performance-based funding for institutions boosts their overall performance.

Accepted policy also assumes that scientific performance increases with the level of autonomy of university management, meaning management's ability to make decisions on staffing, academic affairs, and spending without direction from the government.

In recent decades, these ideas have shaped research policy in most nations. As a result, the share of competitive project funding is increasing, as is the freedom of university management to control their own strategies and budgets, and more and more countries have adopted a performance-based funding system for universities. These three interventions, it is claimed, make research more efficient.

Our research disagrees.


Ex-post evaluation systems are OK, but competition has gone too far

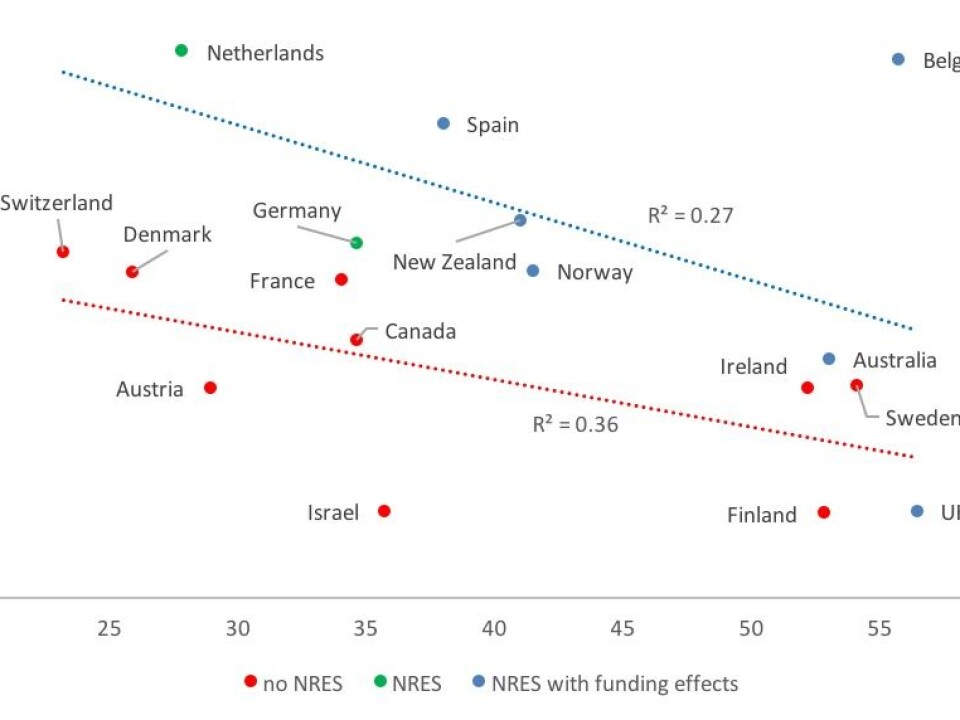

In our data, increasing competition for resources did not improve research efficiency or quality (as shown in the first graph, above). In fact, competitiveness, defined as competitively awarded project funding as a proportion of total R&D funding, was negatively correlated with research quality.

Countries that relied more on competitive sources of funding, such as the UK and Finland, show smaller gains in high quality output as they increased overall spending than countries with a high level of non-competitive, institutional funding, such as the Netherlands and Spain.

We also found that countries with a retrospective national research evaluation system (known as NRES; you can read more about this at the end of the article), in which expert committees regularly evaluate the performance of research organisations over the previous five or so years, are considerably more efficient.

Autonomous management is detrimental to efficiency

Contrary to what is generally claimed, we saw no positive effect of university autonomy on research efficiency.

Financial autonomy of universities, measured as their degree of control over their long-term budgets, and a nation’s research performance do not correlate. What’s more, the other dimensions of university autonomy, such as control over staffing, teaching, governance, and work environment, correlate negatively with efficiency.

That is, the more freedom institutions have to make decisions in these areas without interference from government, the smaller the marginal gains from research spending.

The reason for this may lie in the relationship between institutional autonomy and academic freedom, as more autonomy for universities typically goes hand-in-hand with more power for managers at the expense of faculty.

Data on academic freedom are sparse, but those available show a negative correlation between academic freedom and managerial autonomy at university level.

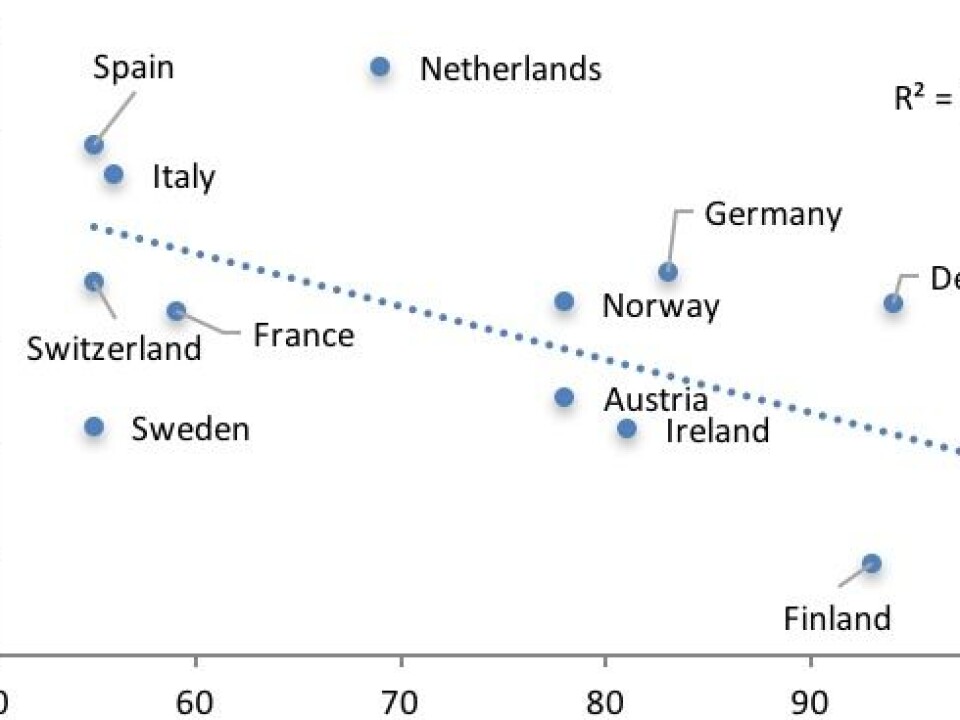

Consequently, more autonomy may result in less academic freedom, which in turn may lower the quality of research, as shown in the second graph above. In this graph, the horizontal (X) axis gives each country's autonomy score (the higher the score, the greater the organisational autonomy), and the vertical (Y) axis shows the level of efficiency. The higher the autonomy, the lower the efficiency. The negative correlation is fairly strong, with a magnitude of 0.58.
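Assuming the 0.58 figure is a Pearson correlation coefficient between autonomy scores and efficiency (the article does not name the measure), the minimal sketch below shows how such a coefficient is computed and why a relationship like the one in the graph carries a negative sign. The helper `pearson` and the toy inputs are hypothetical, not the study's data.

```python
from math import sqrt
from statistics import mean


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length series."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length series with at least two points")
    x_bar, y_bar = mean(x), mean(y)
    cov = sum((a - x_bar) * (b - y_bar) for a, b in zip(x, y))
    sxx = sum((a - x_bar) ** 2 for a in x)
    syy = sum((b - y_bar) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("both series must vary")
    # Result lies in [-1, 1]; a negative value means higher x goes with lower y.
    return cov / sqrt(sxx * syy)


# Toy example: autonomy rising while efficiency falls gives a negative r.
print(pearson([1, 2, 3], [3, 2, 1]))  # -1.0
```

Real country data scatter around any trend line, so the coefficient falls between 0 and −1; a value around −0.58 would indicate a clear but far from perfect negative relationship.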


Towards characteristics of efficient science systems

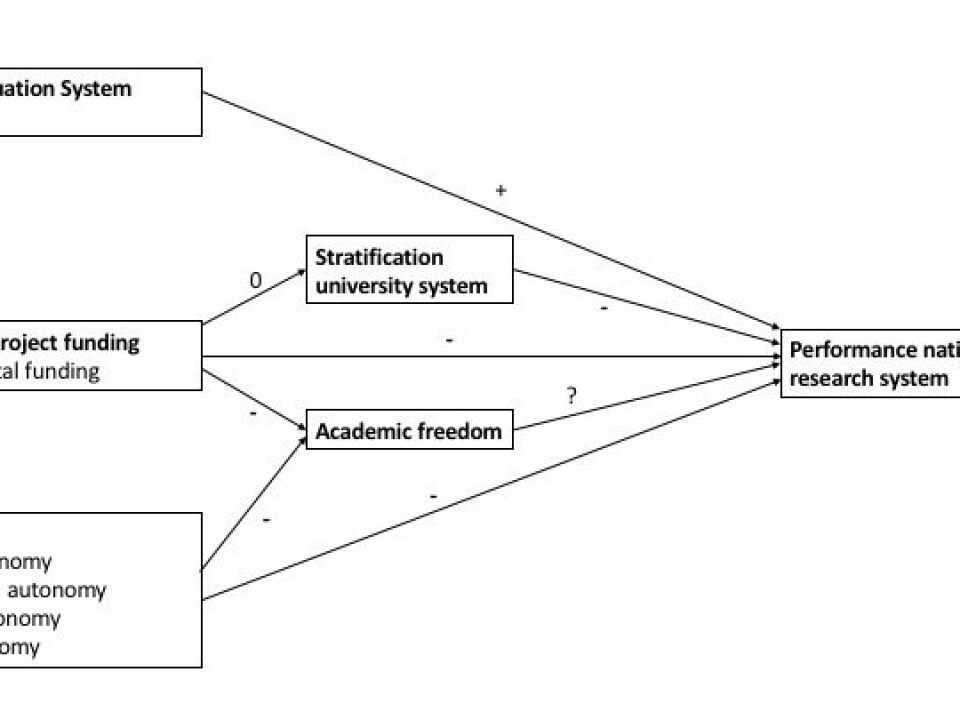

Well-functioning, efficient, science systems seem to be characterised by a research evaluation system that evaluates the work done in the past, combined with high institutional funding, and relatively limited university autonomy in the form of managerial power.

Less efficient systems seem to have a strong focus on control, through some combination of a high level of competitive project funding (which controls what researchers are going to do) and powerful university management (which controls even more). These relationships are summarised in the third figure above.

It is worth reiterating that the scarcity and limited quality of the data make these conclusions somewhat provisional, and that better data are needed from more countries. Even more important is to include types of output other than highly cited papers, to see how returns to society depend on institutional and structural characteristics.

Overall, we would claim that most science policy is hardly evidence-based, and that received wisdom is at best fact-free but more probably harmful to the progress of science.

The characteristics we found in the better-performing systems would probably provide a superior roadmap for future science policy advice.

---------------

Read this article in Danish at ForskerZonen, part of Videnskab.dk