Researchers' Zone:

Computers must learn to make mistakes

As human beings, we sometimes do not know enough to make a decision. Computer systems ought to have the same sense of uncertainty built in.

The development of artificial intelligence (AI) has concentrated on teaching the computer how to get it right. For the past 20-30 years, the aim has been to make neural networks increasingly better at making correct predictions.

As researchers, we have focused on getting AI systems to perform tasks autonomously and with precision.

But we have hardly given a second thought to teaching AI systems that they cannot be certain about everything.

For example, if an AI is right 95 percent of the time, it will still maintain that it is right in the other 5 percent of cases. It will adamantly claim to be right because it has not learnt to be wrong.

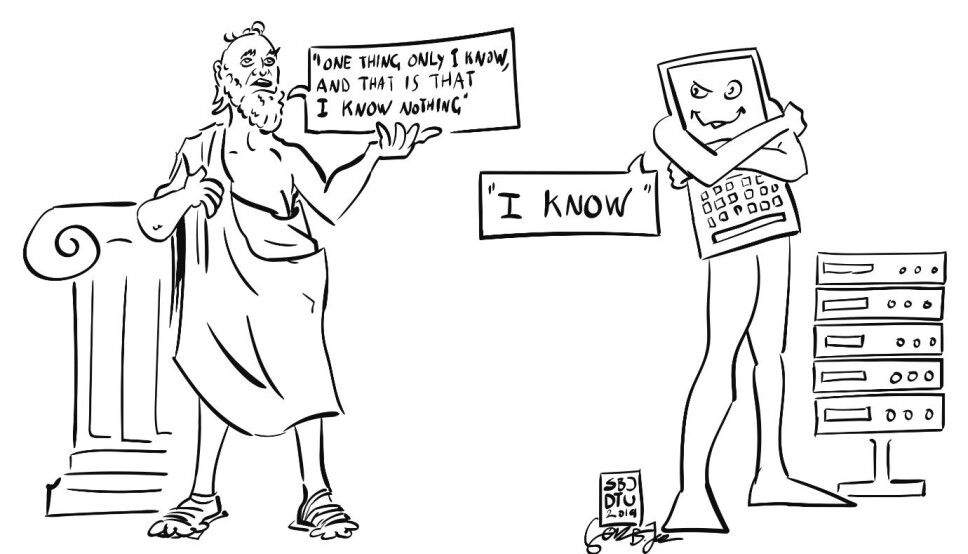

With his famous quote 'all I know is that I know nothing', Socrates taught us thousands of years ago how important it is to recognise our own lack of knowledge, precisely in order to gain knowledge.

Computers do not understand that the weather is not always perfect

Perhaps our artificial neural networks can learn from Socrates's insight: you cannot know everything, and sometimes you do not have sufficient information to make a decision.

Artificial intelligence is simply too self-assured in its conclusions and does not recognise that in some areas it does not have the necessary knowledge to act.

Imagine an artificial intelligence that has to drive an automated car after only having been trained on a straight main road in full sun, yet assumes it can also drive on small, winding roads through sleet and wind.

Rather than pausing and saying 'I cannot drive here, I do not have sufficient information or experience', the computer simply drives as if it were on a straight main road in perfect weather.

But, of course, this can go terribly wrong.
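A toy illustration of why this happens, using a one-dimensional logistic classifier rather than a real driving system. The weight, bias and the assumed training range of roughly [-1, 1] are made up for the example. The point is only that such a model's reported confidence keeps growing the further an input lies from anything it has seen, instead of shrinking.

```python
import math

def confidence(x, weight=2.0, bias=0.0):
    """Confidence a logistic classifier assigns to its own predicted class at input x.

    weight and bias are hypothetical values standing in for a trained model.
    """
    p = 1.0 / (1.0 + math.exp(-(weight * x + bias)))
    return max(p, 1.0 - p)

# Input inside the range the model is assumed to have been trained on.
near_training_data = confidence(0.5)

# Input far outside that range: conditions the model has never seen.
far_from_training_data = confidence(10.0)

print(f"near training data:     {near_training_data:.3f}")
print(f"far from training data: {far_from_training_data:.9f}")
```

The model is most self-assured exactly where it has the least experience, which is the opposite of what we would want from the car on the winding road.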

AI researchers need to focus more on uncertainty

As researchers, we have been obsessed with looking for the positive and not the negative results. Therefore, most AI systems have not learnt that it is beneficial to recognise that sometimes you do not know enough to make a decision.

In other words, uncertainty can be a virtue. The opposite of uncertainty is not certainty but blind conviction, and that can prove fatal when artificial intelligence begins to act and interact in our physical world.

This is what happened when Elaine Herzberg stepped out onto a four-lane road with her bicycle at 10 o'clock in the evening in Tempe, Arizona. In doing so, her life ended abruptly, and she also wrote herself into world history.

She was, in fact, the first person to be hit and killed by an automated car.

What was Elaine?

When the data leading up to the fatal accident were examined, it became clear that the AI was not capable of correctly determining what Elaine Herzberg was. Was she a car? Was she a bicycle? Or was she something completely different?

The system stubbornly categorised her incorrectly and was therefore unable to predict her pattern of movement. This lack of proper classification should have caused the system to brake.

But instead, the car just continued. It was, in other words, not sufficiently uncertain about its own predictions.

Certainty is only one part of the decision-making process. We have not paid sufficient attention to the importance of uncertainty and unpredictability.

If, on the contrary, the system had been trained to include uncertainty as a determining factor in the decision-making process, the artificial intelligence would have known what to do with this lack of knowledge.
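As a minimal sketch of what "uncertainty as a determining factor" could look like, the rule below refuses to act on a classification when the predicted class probabilities are too spread out. It is not how any real vehicle works: the labels, the `slow_down` action and the entropy threshold are all hypothetical, and the rule only helps if the probabilities themselves reflect the model's true uncertainty.

```python
import math

def entropy(probs):
    """Shannon entropy (in nats) of a discrete probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def decide(class_probs, max_entropy=0.5):
    """Return the most likely label, or 'slow_down' if the prediction is too uncertain.

    class_probs: dict mapping label -> probability (assumed to sum to 1).
    max_entropy: hypothetical threshold that would have to be tuned in practice.
    """
    if entropy(class_probs.values()) > max_entropy:
        return "slow_down"
    return max(class_probs, key=class_probs.get)

confident = decide({"car": 0.97, "bicycle": 0.02, "other": 0.01})
unsure = decide({"car": 0.40, "bicycle": 0.35, "other": 0.25})
print(confident, unsure)
```

In the first case the system acts on its classification. In the second it cannot tell a car from a bicycle from something else, so the lack of knowledge itself triggers the cautious action.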

But it did not know, so the car continued driving the permitted 60 kilometres per hour, killing Elaine Herzberg.

Insufficient knowledge is important

The rapidly accelerating development of artificial intelligence has been spurred on by the desire to get it right as often as possible.

If an AI is right 85 percent of the time, it is rated as being better than if it is right 80 percent of the time.

In the short term, this approach has been excellent, but we must gradually acknowledge that learning is not just about being right: it is just as much about acknowledging your own uncertainties.

At DTU Compute, we have succeeded in making an AI include precisely this acknowledgement in its calculations, in work we presented at a conference in Canada.

Data is key

The one-sided focus on getting it right has been a necessary evil to push forward and accelerate the development of artificial intelligence, but it is evident that this focus falls short when we engage these thinking systems in more complex human-like tasks.

It is therefore only natural that we increasingly find analogies in human thinking when artificial intelligence has to be trained to act and make decisions in our society.

By focusing solely on teaching the systems to get it right, we have in principle harvested the low-hanging fruit. In order to reach further up the tree, we have to introduce higher levels of complexity in what we teach the systems.

The key to artificial intelligence is data. Computer systems are excellent at analysing huge amounts of data in a very short time, but now we have to teach the systems that even when data is present, it may not be sufficient, and that in such cases no result is actually better than a wrong result.

How, then, do we teach it that?

Artificial intelligence can learn like children

Once a child has learnt to identify a dog, it will quickly insist that all four-legged animals are dogs. If you tell the child that this particular four-legged animal is actually a cat, all four-legged animals quickly start turning into cats in the child's view.

The same behaviour is also seen in artificial intelligence.

A great help for the child is not only to see many dogs and cats but to see them close to each other in time, so that small differences are more present to the memory.

Interestingly, the same trick turns out to be decisive when an AI has to be made familiar with its own uncertainty.

If, during training, we display similar data to the artificial intelligence, the computer can at the same time see the natural variance found in the data. This clarifies that the blind confidence approach is inadequate.
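The idea can be illustrated with a small sketch. This is not the method from our paper, only a toy version of the intuition: if a training batch is built from inputs that lie close together, the spread of their targets gives a direct view of the local variance. The data, the helper `local_batch` and the batch size are made up for the example.

```python
import random
import statistics

def local_batch(xs, ys, anchor_index, k):
    """Return the k data points whose inputs lie closest to the anchor input."""
    anchor = xs[anchor_index]
    nearest = sorted(range(len(xs)), key=lambda i: abs(xs[i] - anchor))[:k]
    return [xs[i] for i in nearest], [ys[i] for i in nearest]

# Toy data: the true mean is 0 everywhere, but the noise grows with x.
rng = random.Random(0)
xs = [i / 100 for i in range(200)]
ys = [rng.gauss(0.0, 0.1 + x) for x in xs]

# Two batches of similar inputs, one from the quiet region and one from the noisy region.
_, quiet_targets = local_batch(xs, ys, anchor_index=5, k=30)
_, noisy_targets = local_batch(xs, ys, anchor_index=190, k=30)

quiet_spread = statistics.pstdev(quiet_targets)
noisy_spread = statistics.pstdev(noisy_targets)
print(f"spread near x=0.05: {quiet_spread:.2f}")
print(f"spread near x=1.90: {noisy_spread:.2f}")
```

A batch drawn at random from the whole data set would mix both regions and hide this difference. Seeing similar examples side by side is what makes the variance visible.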

We are making progress

We have just demonstrated that this simple trick works wonders when AI systems have to be trained.

In our experiments, we observed, among other things, great improvements when an AI is trained ‘actively’, that is, when it collects data itself while it is being trained.

Here, uncertainty is essential since it allows the computer to request more data where the uncertainty is the greatest.
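A minimal sketch of such an active loop is shown below. The uncertainty score used here, the distance to the nearest input already seen, is only a crude stand-in for a model's own predicted variance, and the names `pick_query` and `distance_to_known` are hypothetical.

```python
def pick_query(pool, uncertainty):
    """Return the unlabelled candidate with the highest uncertainty score."""
    return max(pool, key=uncertainty)

def distance_to_known(labelled):
    """Crude stand-in for model uncertainty: distance to the nearest labelled input."""
    return lambda x: min(abs(x - seen) for seen in labelled)

labelled = [0.0, 0.1, 0.2]   # inputs the system already has data for
pool = [0.15, 0.5, 0.9]      # inputs it could ask about next
queries = []

for _ in range(2):
    query = pick_query(pool, distance_to_known(labelled))
    queries.append(query)
    pool.remove(query)
    labelled.append(query)   # in practice: obtain the label and retrain the model

print(queries)
```

The system asks first about the input furthest from anything it knows, then about the next largest gap, and leaves the well-covered region alone.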

Because existing systems have lacked an understanding of their own uncertainty, they have also had no way of knowing where more data would be most useful.

Fundamentally, it is not really that surprising that good learning requires critical self-awareness and therefore also an understanding of one's own uncertainty.

Researchers and manufacturers of artificial intelligence systems have now been given better opportunities to work with uncertainty.

The new findings were presented at the Conference 'Advances in Neural Information Processing Systems (NeurIPS)' in Vancouver on December 11, 2019. The corresponding article is available online. The work was funded by the Villum Foundation and the European Research Council.

Sources

Martin Jørgensen’s profile (DTU)

Nicki Skafte Detlefsen’s profile (DTU)

'Vehicle Automation Report'. National Transportation Safety Board (2018).

'Reliable training and estimation of variance networks'. NeurIPS (2019).
