Statistics yield better results from climate models

Nordic researchers are creating more realistic forecasts of Earth’s future climate by collaborating with statisticians. Now they’re encouraging other climate researchers to do the same.

The point of climate models is to predict future climate developments. Computer programs built on mathematical formulas are fed information about wind, temperature and other factors such as carbon dioxide levels, sea ice and cloud formation.

The goal is for the programs to serve as a model of the globe that can tell us how our planet will behave in the future. Politicians use these future scenarios, called projections, as the basis for making environmental decisions.

However, many have criticized the models for not providing reliable results. The Nordic research network Nordic eScience Globalization Initiative (NeGI) may have found a way to obtain more accurate results: it is now bringing in statisticians to help interpret the results that the models generate.

Complexity multiplies uncertainty

The climate models have gradually become more complex as computers have become faster and more powerful. The first climate models contained mathematical descriptions of the atmosphere, sea, land and sea ice. Later, researchers added information on how various substances, such as CO2 and water vapour, move through the atmosphere.

The latest versions of the models include information about ice cover, sea level and local weather developments over a long period. The resolution of the models has also increased.

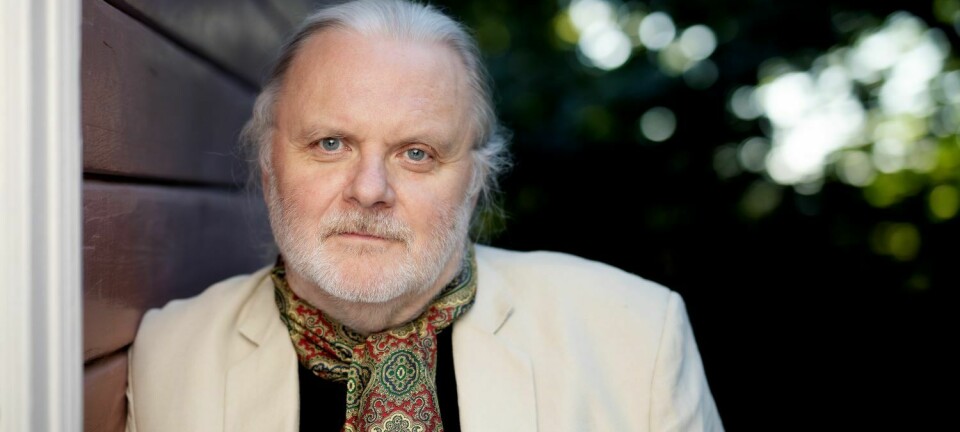

Rasmus Benestad at the Norwegian Meteorological Institute is one of the authors of an article published in the journal Nature Climate Change in September. The researchers compare climate model resolution to the resolution of a digital image.

"Imagine the Earth being covered by a network of small squares," says Benestad. "Each square is like a pixel in a digital image. The models figure out how the climate works in each square. We improved our weather forecasting models by using more and smaller squares, which increased the image resolution."

Climate researchers use historical data going back hundreds of years to test how well their models are able to predict temperatures at the Earth's surface. So far, the model projections for the last century have proven consistent with what the climate was actually like.

But the climate models for this century haven’t been consistent with what we know has happened.

Much warmer, much faster?

The models predicted that the past 20 years would warm much more, and much faster, than has turned out to be the case. It is also difficult to create mathematical formulas that describe how extreme weather and water vapour in the form of clouds and rain affect temperature.

This unreliability becomes a problem when climate scientists need to give politicians and bureaucrats a basis for decisions about the future.

Benestad says it's important to provide the most accurate advice possible about what local climate change we can expect in the coming decades. Realistic forecasts are critical when “scientists are consulted about what crops farmers should choose or whether it makes sense to invest in a ski resort given the projected climate developments.”

Researchers usually run simulations multiple times with slightly different settings, which yield varying future scenarios. They don't know how things will actually develop, but they can observe which computer scenarios turn up most often and where the results are almost the same. Since computer capabilities have increased in recent years, researchers have begun to compare results from many different climate models in the hope that this will provide more reliable projections.
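The basic idea of running a model many times with slightly different settings and then looking at where the results cluster can be sketched in a few lines of Python. This is a hypothetical toy, not any real climate model: the function names `toy_projection` and `run_ensemble`, the "warming equals sensitivity times forcing" formula, and all the numbers are illustrative assumptions, not taken from the article or from Benestad's work.

```python
import random
import statistics

# Hypothetical toy "model": warming in °C is a sensitivity parameter times a forcing.
# Real climate models solve physical equations on a grid; this only mimics the
# idea of re-running one model with slightly different settings.
def toy_projection(sensitivity, forcing):
    return sensitivity * forcing


def run_ensemble(n_runs, base_sensitivity, spread, forcing, seed=0):
    """Run the toy model n_runs times, nudging the sensitivity each time."""
    rng = random.Random(seed)  # fixed seed so the ensemble is reproducible
    results = []
    for _ in range(n_runs):
        # Each member draws a slightly different sensitivity around the base value.
        sensitivity = rng.gauss(base_sensitivity, spread)
        results.append(toy_projection(sensitivity, forcing))
    return results


# Illustrative numbers only, not taken from the article or any real model.
runs = run_ensemble(n_runs=50, base_sensitivity=0.8, spread=0.1, forcing=3.7)

mean = statistics.mean(runs)          # central estimate across members
sd = statistics.stdev(runs)           # how far members typically disagree
cuts = statistics.quantiles(runs, n=20)
low, high = cuts[0], cuts[-1]         # roughly the 5th and 95th percentiles

print(f"ensemble mean: {mean:.2f} °C")
print(f"spread (1 s.d.): {sd:.2f} °C")
print(f"about 90% of runs fall between {low:.2f} and {high:.2f} °C")
```

Here the mean stands in for the outcome the runs agree on most, and the spread is a first, very simple measure of uncertainty. The more advanced statistical methods the NeGI researchers call for go well beyond a mean and a standard deviation.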

Kjell Stordahl, who develops statistical models for Telenor, compares this method to an orchestra in which each musician concentrates on playing his or her own instrument. Together, the musicians create a fuller sound in which no single musician's weaknesses dominate.

Simulating multiple processes – such as how water vapour or ice cover behaves – and comparing results from several models increase the uncertainty of the results. Benestad says that the statistical methods climate scientists have used up to now have been fairly simple, but that researchers have found computer projections match known realities more closely when more advanced statistical methods are used.

Meteorologists and physicists have dominated climate research. Benestad and his NeGI colleagues believe that the results will become more credible and reliable if statisticians help climate scientists assess how certain their research results are, using figures that others can verify afterwards.

Historical coincidence

American statistician Peter Bloomfield believes the reasons so few statisticians have been involved in research on climate models are both historical and organizational. He is Professor Emeritus at North Carolina State University and a member of the Advisory Committee on Climate Research in the American Statistical Association (ASA).

"ASA tried to figure out how many statisticians had been involved in the report of the UN's Intergovernmental Panel on Climate Change (IPCC) ten years ago, when it received the Nobel Peace Prize," he said.

"They found about a dozen statisticians among the several thousand researchers who were somehow involved in writing the final report.”

Bloomfield believes that many more statisticians are involved now than ten years ago. Yet the cooperation between statisticians and climate researchers remains haphazard and short-lived. Only in the past five years has it become clear that the models are like a jigsaw puzzle of how the climate works, with many puzzle pieces still missing. The scenarios have big pixels and the picture isn't complete.

Admitting that the results are uncertain because the models provide an incomplete simulation is a big step, he says, and “this has been swept under the rug for years."

Research policy guidelines and funding are other reasons that so few statisticians have been doing climate research.

Bloomfield says most statisticians have found funding in the pharmaceutical industry, where they make models that predict how medicines will behave in the body. Those who have worked on climate research have done so in their spare time, out of personal interest.

He appreciates the input from the Nordic research network, which aligns well with ASA's initiative to involve more statisticians in the UN climate panel. ASA's Advisory Committee on Climate Research is planning special meetings to this end at next year's Joint Statistical Meetings in Vancouver, the largest statistics conference in North America.

"It’s important to show the uncertainty in computer models with numbers that can be verified," Bloomfield says. “Climate models are a key candidate for this. Numerous articles have been published about quantifying uncertainty in recent years, and Benestad's article is completely in line with this work."

Turbulent path forward

The path forward will be turbulent, despite Benestad and Bloomfield's optimism. The Nordic research network is closing its doors next year, with no clear plans for how its experience will be carried forward at the international level.

In September, the IPCC launched the sixth assessment cycle of reviewing what climate scientists have found since the last cycle in 2014. Quantifying model uncertainty is not included in the work plans.

The World Meteorological Organization (WMO) has a working group for climate research. They systematically work to improve research methods by comparing the results of different models. WMO has begun a new review called CMIP6, but they are using old methods that do not include statisticians.

"CMIP6 has tried to make its methods more consistent across projects," said Benestad. The working group used advanced statistical methods that aren't as common among researchers who don't specialize in statistics.

Another challenging factor is the Trump administration's deprioritization of geophysical research. The National Center for Atmospheric Research is a federal research centre that gives American universities access to expensive research resources, such as powerful computing clusters, model simulations and a research aircraft that collects data about what is happening in the atmosphere. Despite drastic budget cuts and reduced support for statistical and mathematical methods, Benestad remains optimistic.

"With more efficient statistical methods, we can extract more useful information from the large amounts of data generated by climate models," says Benestad. “That will give us a clearer picture of what we can expect in the future.”

——————————————————-

Read the Norwegian version of this article at forskning.no

Scientific links

- Benestad, R. et al.: "New vigour involving statisticians to overcome ensemble fatigue", Nature Climate Change 7, 9 September 2017, doi:10.1038/nclimate3393

- Flato, G. et al.: Evaluation of Climate Models. In: Climate Change 2013: The Physical Science Basis. Contribution of Working Group I to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change, Cambridge University Press